Learning to See from Scratch

When I look out of the window behind my desk, why is the landscape so perfectly partitioned into different objects? Why do I see trees, cars, flowers and houses instead of just a swirling blur of particles or a chaotic kaleidoscope of colours? Human beings seem to have an effortless ability to turn raw visual data into meaningful objects in an integrated scene.

What criteria do we use to decompose raw visual data into objects? One obvious constraint is that the set of objects should faithfully represent what is really there. Our ability to survive in dangerous environments would be greatly impaired if relevant objects were omitted or represented in a misleading way. A faithful object decomposition should create a reliable foundation for reasoning and problem solving.

But for any scene, there are many possible faithful object decompositions. Take these stacks of chairs for example.

When moving a stack of chairs to another room it is useful to group information about the individual chairs together as a single object. On the other hand, when the goal is to count each of the individual chairs, a more fine-grained decomposition is preferred (and perhaps when repairing a chair an even more fine-grained decomposition is needed). - On the Binding Problem in Artificial Neural Networks

The best choice of objects and the appropriate granularity of decomposition depends on what is most useful for the particular problem at hand. For challenging problems, the large number of decompositions necessitates visual search over the possibilities to find the one that fits best, and with ambiguous scenes like the illusion below, your visual system may just flick restlessly back and forth without settling on a winner.

ARC

The reason why I’ve been thinking about vision and perception is because I got tired of just grinding math and decided to work on a concrete project for a bit, so for the past month I’ve been working on a machine learning model to solve ARC tasks.

If you aren’t familiar with ARC, it stands for the “abstraction and reasoning corpus” which is a dataset of grid-based reasoning tasks designed to assess an AI's capacity to understand and apply abstract concepts without relying on extensive prior knowledge. Solving these tasks using AI has been turned into a competition hosted by François Chollet and Mike Knoop with a $600,000 prize available for whoever is able to create a model that can solve 85% of the tasks.

Each ARC task is like a visual IQ test question. The input is a set of 3-5 pairs of example grids followed by a test grid. Your model should infer the common pattern between the example input-output pairs and apply it to the test input to predict the corresponding output grid.

Based on this context, the model should infer the following output grid:

There’s a lot of variety in the kinds of tasks and some of them are quite challenging even for humans. You can check out the tasks using this online viewer and try to solve a couple yourself.

Now one month wasn’t nearly enough time for me (a noob) to build a machine learning model good enough to solve full ARC challenges, so I won’t be getting the $600,000 prize any time soon. But I made some progress and I’d like to share my current approach and what I’m going to try next.

Perception

Solving an ARC task can roughly be broken down into two steps: perception and reasoning, so the first challenge is to create a model that can partition a grid into meaningful objects.

One thing you could do is feed the model human-annotated grid partitions and reward it for learning human object decompositions. But not only would that require a lot of boring manual effort to create the data, it would also result in a much less interesting model. I want to know whether a model can learn useful object representations by itself, not just copy mine.

But starting from scratch is quite daunting. Why? Well consider how many possible object decompositions there are for a simple 4x4 grid.

Since you can think of the grid as a set of 16 cells, the number of object decompositions is equivalent to the number of possible partitions of a 16 element set. Asking about the size of the set of all partitions is like asking how many unique ways you could smash a plate such that all the pieces could still be glued back together into the original shape.

The number of possible partitions of a set of elements is described by the Bell number B(16), which is over 10 billion! Since ARC grids can contain up to 900 cells, brute force search over all possible partitions would quickly become intractable.

But when humans look at a scene we generally only consider partitions into between 2 and 7 objects, a range that is likely related to our working memory capacity. If humans can solve ARC challenges under these constraints, then we should assume our model could as well.

We can count partitions of a certain size using the so-called Stirling numbers of the second kind. The number S(16, 3) is the number of ways 16 cells can be partitioned into 3 objects. If we want to find the number of possible partitions between 2 and 4 objects, we can simply sum S(16, 2) + S(16, 3) + S(16, 4) which gives a number 100x smaller than the Bell number B(16).1

Still, 100 million partitions is a lot to check, and the vast majority of partitions will be useless and uninteresting. For instance, this is one of the elements in S(16, 3).

To our human eyes, it just doesn’t feel meaningful. It ignores important information like the shape and colour information in the grid.

But this partition on the other hand feels much more useful because it segments the grid into two similar objects and the background.

So the problem of perception is how to create a model that can learn to pick out the few meaningful object partitions from the hundreds of millions (or billions) of useless ones.

Slot Attention

I experimented with an object-centric approach. My model starts by extracting features from the grids using a vision transformer (ViT). I was somewhat familiar with transformers already because I knew they were used for large language models, but they can also be used for non-textual inputs with structure like images. You can encode ARC-style grids of colours by flattening them into sequences of tokens and adding 2D coordinate position information to each token.

The tokens get passed into the transformer’s self-attention mechanism, followed by the slot attention module. The slot attention module is the key part of the model that enables the model to learn to recognise objects. You can think of the slots in slot attention as empty registers in working memory that can focus on different features of the input grid.

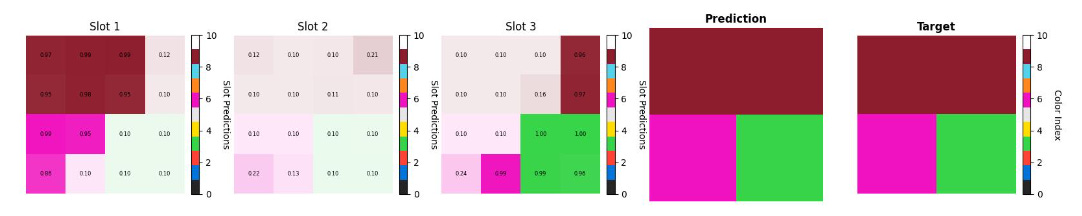

The slots compete with each other to focus on various features from the input like colour, shape and spatial position, which has the effect of dividing the input grid between the slots. At the beginning of training, it looks like nothing is happening - the slots look quite homogenous and there isn’t any differentiation between the objects at each slot position.

But gradually over training they learn to divide up the input grid between themselves. Because they see many thousands of grids during training and the slot attention module forces them to partition each input grid into a small number of slots, the model learns to pick up on common grid patterns that help it reconstruct the input grid.

By the end of training, you start to see some interesting partitions. For example, this one looks fairly logical. It segments the grid by colour to produce three meaningful objects.

Likewise with this grid, there are multiple ways you could logically partition it, but this one which divides the grid horizontally into a top and bottom piece feels pretty reasonable.

But there were plenty of unusual examples too.

Perception and Reasoning

The partitions above were only evaluated based on how well they were able to successfully reconstruct the input grid. But being able to reconstruct the input grid from a partition is a necessary but insufficient pre-requisite to having a good model. There’s actually a hierarchy of partition usefulness summarised in the image below.

The largest subset of partitions and the one that is easiest to train a model for is the what we’ve already discussed above - the ability to partition and reconstruct a single input grid. But ARC tasks are composed of multiple example input-output pairs. So a further constraint is that we only care about grid partitions that are in some way consistent or analogous across each input grid. It would be useful to be able to extract and match analogous objects that play the same “role” between the input grids and output grids and use that information for downstream reasoning.

I did some preliminary experiments here by tweaking the slot attention module’s initialisation strategy. I initialised the slots by extracting and combining features from all of the input grids using cross-attention. Then I ran the model independently over each grid with the shared slot initialisations. This meant that the model could semi-reliably extract similar objects at each slot index. Here are a couple of examples.

Overall I’m quite skeptical of this shared initialisation approach working beyond simple grids. It seems to heavily rely on spatial similarity as opposed to more abstract similarities. It’s likely that matching analogous objects for more complex ARC tasks would require a more sophisticated approach.

Returning to the hierarchy, the gold standard determinant of partition quality is whether the object partition the model finds actually helps you perform the downstream reasoning task. I ran out of time to perform any concrete experiments, but here are some vague ideas.

One of the benefits of object decomposition is that it allows us to perform compositional reasoning, meaning we can divide up a scene into objects, perform mental or physical operations on them independently and combine them into a solution. This divide-and-conquer approach makes the problem solving process simpler because each step requires less cognitive horsepower compared to trying to perform a single mega-operation over a complex object.

What this might look like practically is using an object-centric model to partition the input and output grids, matching the corresponding objects between the inputs and outputs, then having an LLM or symbolic reasoning engine write a simple program to perform the transformations over each set of analogous objects, checking that they succeed on the example input-output pairs before finally applying them to the test input grid. More experimentation here is needed!

My plans are to take a break from working on ARC to learn more about approaches to reasoning like discrete program search, LLM-based program sampling and DreamCoder. Excited to share more about that soon!