Progress (or lack thereof)

What did I get done over the past few months?

It’s been 5 months since I quit my job, so here’s a summary of, and reflection on, what I’ve been up to so far.

Overall I would say that there have not been many “wins”. I realise this sounds quite negative. Everyone wants to feel some form of positive feedback from the universe that they are not just wasting their time, and if you quit your job, you are going to feel additional pressure to make sure the opportunity cost of not working and earning money is worth it. How should you respond when it feels like you aren’t getting anywhere? Righteous indignation? Blame conspiracy or fate for condemning you to fail?

Unfortunately you can probably rule those out. Absent manipulation, markets (for attention, ideas, labor, etc.) give honest feedback and aren’t designed to torture you for no reason. In other words, if you aren’t getting the results you were hoping for, you know who to blame.

Again, this is overly negative and by the end of this essay I hope to persuade myself that it’s actually a cause for optimism.

Summary

Learning

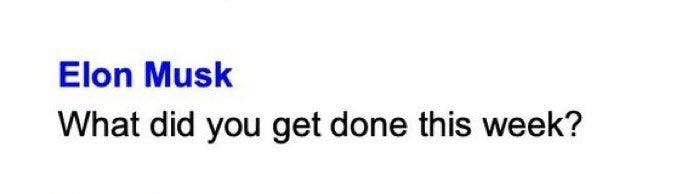

Math, textbooks, MathAcademy (~1.5 months)

Quickly began to feel like fake pre-requisites I’d invented for no reason

Why not work on real problems and use AI to backfill knowledge?

Bounties and Competitions

A (bad) attempt at ARC (~3 weeks)

Learnt a ton - training NNs, implementing papers, running experiments…

Justified the idea that working on hard, interesting problems is The Way™

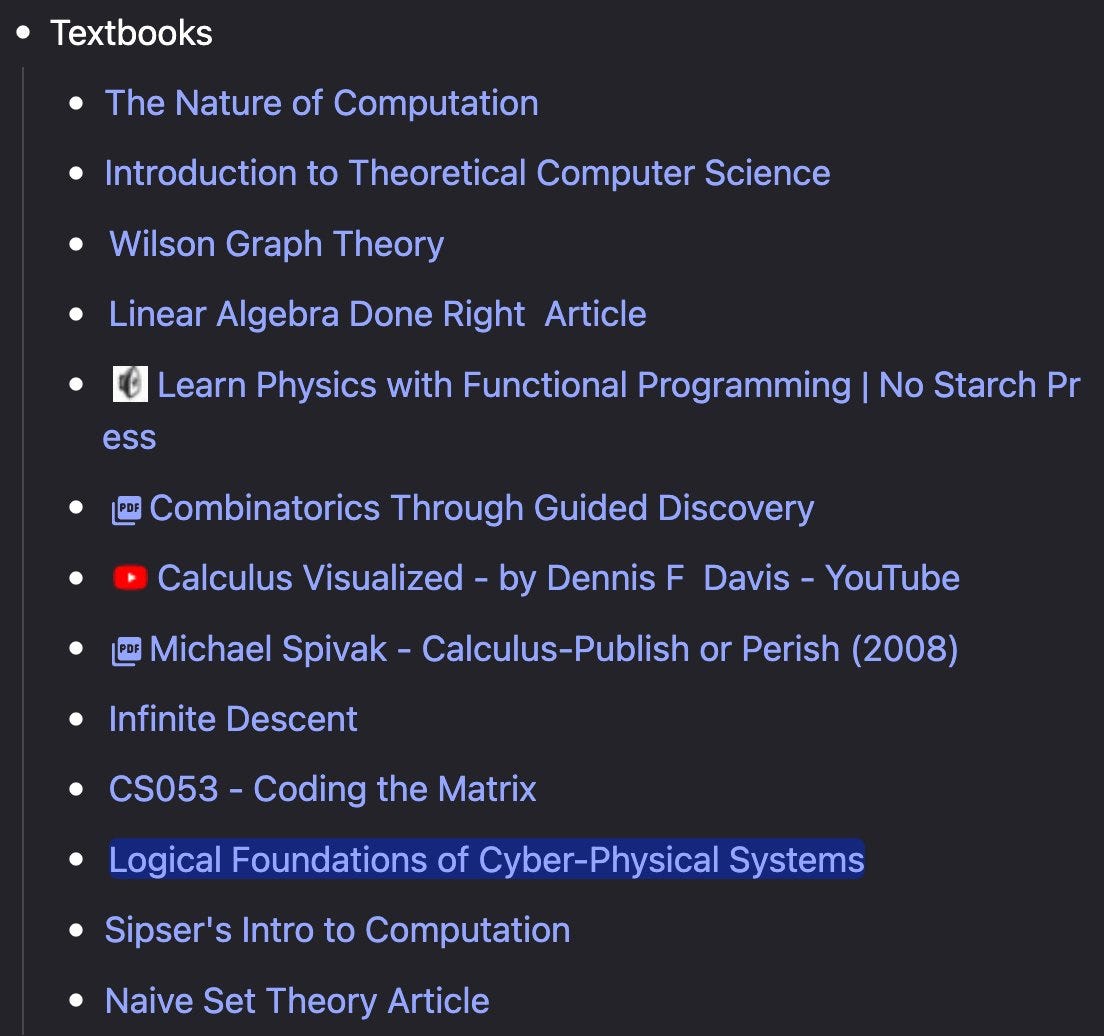

(Slow) HVM3 optimisation (~1 day)

Managed to get a +10% speedup but the bounty was for at least +50%

Again, justified working on hard, interesting problems > textbooks

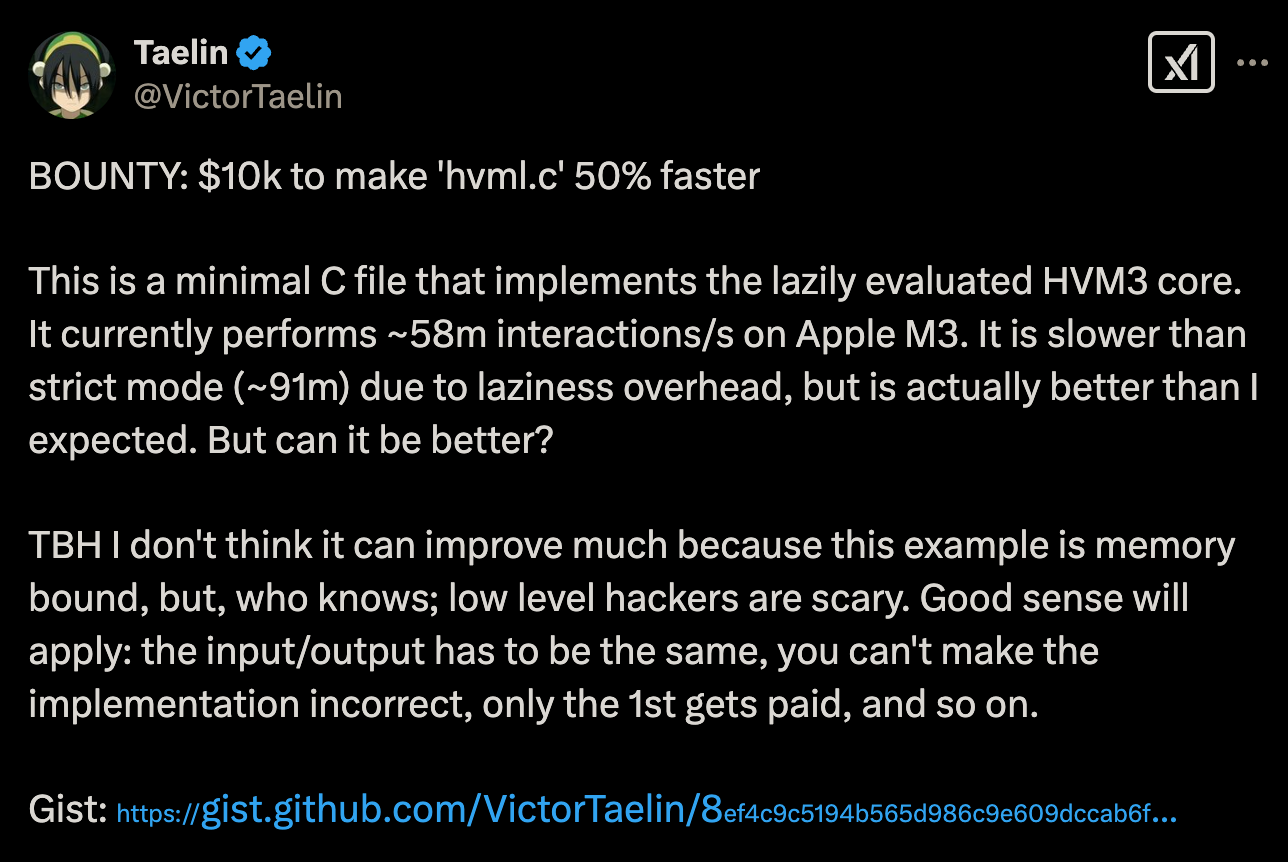

(Slow, buggy) parallel hashing algorithms for TinyGrad (~2 months)

Tried to implement SHA3, showed that it can’t be parallelised

Implemented BLAKE3, wrestled TinyGrad’s CI, fixed GPUOcelot bug

Spent too long, stick to 2 weeks max for experiments/bounties

Found and fixed a bug in GPUOcelot’s PTX ⇒ LLVM compiler (~1 week)

This was actually a win IMO

Starting from ~zero knowledge of assembly, compilers, PTX, C++…

A (failed) refactor bounty for HVM3 (2 weeks)

Built a code context parsing tool for Haskell and C using tree-sitter

Used DSPy, active learning and LLM judges to create optimised prompts

Couldn’t reach accuracy/precision requirements

Other Misc. Explorations

Now: DSPy-inspired library for text-based gradient descent

Math

The thing I was most interested in when I quit my job was going deeper into learning math. I had already been spending all of my evenings and weekends on this before I decided to leave my job and I’d made solid progress through a number of undergrad textbooks. I even tried to make my own mini logic and proofs course using the Lean theorem prover.

So from the beginning I spent a lot of time going through math textbooks. I was also lucky to learn about MathAcademy pretty much immediately after quitting and interviewed one of the cofounders multiple times.

But after a couple of months I started to lose faith in studying math for math’s sake. Learning aimlessly divorced from a concrete problem lost its appeal. I didn’t create any interesting artefacts or “proof of learning” during that period (apart from flashcards) which also weighed on me. It felt like I was just fulfilling a sort of made up prerequisite. Why wasn’t I trying to be creative or solve real problems instead?

Was it short-termist to abandon learning in favour of problem solving? I felt (and still feel) this persistent stress caused by the idea that I’m on borrowed time, combined with the pressure to act that probably all of us are feeling due to advancements in AI.

I do genuinely think AI has changed the optimal strategy here. 1) It’s getting so much easier to backfill knowledge as you work on something. 2) AI updates so often that what you learn today may not be relevant in 6 months. 3) I think the main bottleneck is problem discovery and valuation which is a function of knowledge/learning, but probably not textbook/course learning.

HVM3 Optimisation Attempt

I looked over my list of potential projects and ideas and picked something I stumbled across on Twitter - a bounty to optimise a part of the HVM3 codebase. One of the things I was very keen on gaining from this period was deeper technical abilities and this seemed like a good way to work towards that.

I felt like I was able to quickly go from knowing nothing about the problem to actually making a reasonable attempt - I managed to speed it up ~10% or so and tried some other plausible things that didn’t work out. I looked at solution attempts from more experienced people on their Discord which showed me my ideas were reasonable.

To me it validated the idea that you can make much more progress than you would expect by just picking something hard and grinding at it.

ARC

Next I saw that the ARC competition was coming to a close but there might still be time to make a rushed submission. I worked on a ViT using slot attention in an attempt to create a model that could learn to partition the input grids into meaningful objects. I did a complete write up here.

In just 3 weeks I managed to learn a ton, including a lot of tacit knowledge I couldn’t have gotten from textbooks or courses. It felt like a much better investment than reading a textbook, even though the end result was obviously nowhere near a viable solution to ARC. I reused a lot of the ideas I learned here later on to fix the GPUOcelot bug and when working with DSPy.

TinyGrad Parallel Hashing Algorithms

Then I remembered that TinyGrad has a public list of bounties. I’d been following the project casually because I used to watch George’s streams when I was starting out learning programming. I picked one of the $200 bounties which was to implement a parallel SHA3 algorithm in TinyGrad for hashing machine learning models using GPUs.

Unfortunately, after 2 weeks it turned out that this isn’t possible: SHA3 is inherently sequential, because each block of input is absorbed into a state that depends on all of the blocks before it.
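To make the distinction concrete, here is a rough Python sketch. It is not TinyGrad code and not the real internals of SHA3 or BLAKE3: `chained_digest`, `tree_digest` and `CHUNK` are hypothetical names, and `hashlib.sha3_256` is only used as a stand-in building block. The chained version has to process chunks one at a time because each step needs the previous state, while the tree version (the general shape that Merkle-tree hashes such as BLAKE3 rely on) hashes every chunk independently before combining the results.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor

CHUNK = 1024  # illustrative chunk size, chosen for this sketch


def chained_digest(data: bytes) -> bytes:
    """Sequential chain: every step depends on the previous state."""
    state = b"\x00" * 32
    for i in range(0, len(data), CHUNK):
        # Cannot start this step until the previous state is known.
        state = hashlib.sha3_256(state + data[i:i + CHUNK]).digest()
    return state


def _leaf(chunk: bytes) -> bytes:
    # Leaves and parents get different prefixes so they cannot be confused.
    return hashlib.sha3_256(b"\x00" + chunk).digest()


def _parent(left: bytes, right: bytes) -> bytes:
    return hashlib.sha3_256(b"\x01" + left + right).digest()


def tree_digest(data: bytes, workers: int = 4) -> bytes:
    """Tree hash: leaf hashes are independent, so they can run in parallel."""
    chunks = [data[i:i + CHUNK] for i in range(0, len(data), CHUNK)] or [b""]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        level = list(pool.map(_leaf, chunks))  # map preserves chunk order
    while len(level) > 1:
        nxt = [_parent(level[i], level[i + 1])
               for i in range(0, len(level) - 1, 2)]
        if len(level) % 2:
            nxt.append(level[-1])  # odd node is carried up unchanged
        level = nxt
    return level[0]


data = bytes(range(256)) * 40  # 10,240 bytes, i.e. ten chunks
chain_result = chained_digest(data)
tree_result = tree_digest(data)
```

The two functions produce different digests by design. The point is only the dependency structure: the loop in `chained_digest` has no independent work to hand out, whereas every call to `_leaf` could run on a separate GPU thread.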

George updated the bounty, so I decided to give the BLAKE3 algorithm a shot.

This was more involved. I spent ages getting CI tests to pass on all of the different devices (Metal, CPU, Nvidia, AMD, …) TinyGrad supports. I ran their GitHub Actions workflow locally and found a bug in one of the libraries they use to test the Nvidia device backend in CI. So as a side quest I spent around a week fixing that and managed to get it merged, which was pretty cool given that I was starting from ~zero knowledge of assembly, compilers, PTX, C++ etc.

After that I spent ages trying to fix the final issues with BLAKE3 but in the end it started to feel a bit futile.

This was a recurring challenge I found while working on bounties. How do you know when to give up vs stubbornly persisting? One part of your brain will criticise you for being weak if you give up. The other part will remind you that every additional week you spend without making progress is a week you could have spent doing something more promising. I think this is something you can only really calibrate by trying and failing (there’s no way you can learn this from a book or course). After writing this I’ve settled on a heuristic of 2-3 weeks max for uncertain experiments/bounties.

HVM3 Refactor Bounty

Another HVM3 bounty. This time it was to develop an AI tool that, given a snapshot of HVM3's codebase and a refactor request, could correctly determine which chunks of code must be edited.

I wanted to see how well a small, cheap model could do at this task. I wrote a context parser class that used tree-sitter to parse the C and Haskell code and provide accurate context. I then used DSPy to write and optimise the prompts, with an active learning-ish approach to mining synthetic data.

This was pretty fun. It used a lot of cutting-edge ideas for building LLM programs, like generating synthetic data, active learning, automated prompt engineering etc. It’s given me a solid understanding of how these general techniques can be used to solve problems and what their current limitations are. I’m very confident I will return to these ideas in the future. But again the accuracy and precision just weren’t good enough to reach the bounty’s target.
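For a sense of what measuring this kind of task can look like, here is a minimal sketch that scores one refactor request by comparing the chunks a model flagged against the chunks that really needed editing. The function name, the chunk IDs and the choice of precision and recall are all assumptions for illustration; the bounty's actual scoring rules and targets are not reproduced here.

```python
def precision_recall(predicted: set, expected: set) -> tuple:
    """Return (precision, recall) for one refactor request.

    precision: share of flagged chunks that really needed editing.
    recall:    share of chunks needing edits that were flagged.
    """
    true_positives = len(predicted & expected)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(expected) if expected else 0.0
    return precision, recall


# Hypothetical chunk IDs, made up for this example.
predicted = {"chunk_12", "chunk_40", "chunk_41"}
expected = {"chunk_12", "chunk_41", "chunk_77"}

precision, recall = precision_recall(predicted, expected)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

Flagging too many chunks hurts precision and flagging too few hurts recall, which is why a small model can look decent on one number and still miss a target that depends on both.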

Final Reflection

Had I persisted with the math courses, would I feel better off now? Certainly I would have gotten some grades or some other form of tangible feedback from the courses or textbooks to tell me that I was making progress. But progress towards what? I want to be able to solve difficult problems, create useful ideas, become more technical, build a company, do something interesting. I think creating unnecessary indirection in the way of that is just a bad idea.

Ultimately the feedback you get from really tricky, uncertain problems like public bounties and competitions is going to be ruthlessly honest with no sugar-coating. If you suck, it’s because you actually suck. But that’s cause for optimism because it means that in terms of progress there is plenty of low hanging fruit yet to be picked. Looking at the quality of the work I did at the beginning compared to recently, there is clear improvement: I am capable of things I was not 5 months ago. I am confident that if I keep going I will just get better and better.

Next Steps

That’s all of the main stuff I worked on over the past few months. So what’s next?

“People, ideas, machines — in that order!”

In terms of projects, I’m working on and off on a DSPy-inspired library for text-based gradient descent, but my main priority is fixing my environment. I’ve learned it’s just extremely mentally taxing to do something like this by yourself. Starting a small Discord community with friends I met from Twitter and other corners of the internet certainly helped, but I think I need to find similar people IRL, ideally in London where I intend to move very soon. Definitely let me know if you want to meet up there.

Otherwise, cya in another few months, fingers crossed for some concrete WINS.